Control System Validation: Key to Automated Food Processing

To maximize the benefit of modern computerized automation, food companies must adopt and properly execute a validation protocol for their digital control systems.

Over the past decade, food manufacturers have increasingly adopted computer-based systems for process control. This is primarily due to data accessibility, application flexibility, and low cost provided by microprocessor technology. However, microprocessor-based control systems are more complex than the mechanical and pneumatic devices they replace and require a thorough validation to ensure adequate reliability.

Control system validation is a set of measures to ensure that a new or reengineered control system functions properly according to prescribed guidelines and responds predictably to system disturbances. The lack of such measures has led to costly failures in manufacturing.

The most recent widespread example of inadequate computer system validation is the so-called “Y2K bug” that describes the inability of computer programs to correctly interpret the date at the turn of the century. The most recently publicized example in the food industry was a failure in Hershey’s Manufacturing Execution System in October 1999 that was estimated to cost the company $100 million in sales and a 0.5% loss in market share to competitors (Reuters, 1999).

More serious, however, have been less-well-known instances where inadequate control system validation has led to large-scale failures, and in some cases to loss of life. Perhaps the most well-studied incident in this regard occurred with the Therac-25 medical electron accelerator. Between June 1985 and January 1987, a software bug in the system controller led to several deaths and severe injuries from massive radiation overdose (Leveson and Turner, 1993).

The goal of control system validation is to ensure the proper operation of equipment under normal and abnormal conditions. Validation helps to assure product safety. The Food and Drug Administration has long recognized the need for validation of computerized control systems as a core component of total process validation. However, there are still no computer-based system validation guidelines in place for the food industry. In general, only a very small systematic effort has been put forth to prevent problems caused by inadequate validation (McGrath et al., 1998).

Insufficient validation can lead not only to severe economic losses, but, more important, to a potential human health hazard. Both of these should be a major concern to food manufacturers. With increasing reliance on computer systems, the food processing industry is at increased risk of monetary loss unless proper validation guidelines are adopted. By employing strict validation procedures now, food manufacturers will benefit economically by minimizing the number of processing failures and possible recalls due to control system malfunctioning and avert serious failures that could possibly lead to a human health hazard.

This article discusses some of the issues food manufacturers should consider when developing guidelines and standards for their computer-controlled systems.

The FDA Perspective

FDA defines validation as “the establishment of documented evidence, which provides a high degree of assurance that a specific process will consistently produce a product meeting its predetermined specifications and quality attributes” (FDA, 1987a). FDA promotes the concept of control system validation as a part of process validation within the food industry and has established some inspectional guidelines (Guide to Inspections of Computerized Systems in the Food Processing Industry) (FDA, 1998b) for computer-based control systems.

FDA’s authority to regulate the use of computer control processing systems in food production is provided by Section 402(a)(4) of the Federal Food, Drug and Cosmetic Act, which states that all food needs to be produced in such a way as to make sure that it has not been “prepared, packed, or held under insanitary conditions whereby it may have become contaminated with filth, or whereby it may have been rendered injurious to health.”

Title 21, Part 11 of the Code of Federal Regulations addresses validation for computer systems that are used to create electronic records or electronic signatures (FDA, 1998a). Just as a processor using manual labor would design a food process that is safe, computer-controlled food processing systems need to be designed to ensure that the process does not result in any food becoming injurious to health as a result of the process. If a computer is used to store data required by an FDA regulation during the operation of the equipment, the printout of the data is considered to be an electronic record and the data must be compliant with 21 CFR 11; thus, the system must be validated.

According to FDA (1987b), validation of a computer control system should include proper documentation that the computer program performs all adequate checks for the given process and the performance of the program itself is sufficient for the process. Validation of a computer-based industrial control system should include testing the program at every step of the process and verifying that it properly performs the given task. All test results need to be documented for the initial step in the process, for any steps during the process, and for the final stage.

A number of computer-control systems exist for the low-acid canned food processing industry. During the years that these control systems were developed, FDA also established a procedure by which they were reviewed for compliance with the low-acid canned food regulations (21 CFR 108 and 113). Before the installation of any new computerized system to control any low-acid canned food process, the Regulatory Food Processing and Technology Branch of FDA’s Center for Food Safety and Applied Nutrition (CFSAN) asks the manufacturer of the equipment to submit it for review before actual use in the production process. If the system appears satisfactory, CFSAN sends a letter to the vendor or user stating that the system meets the intent of the current regulations and therefore can be used for low-acid canned food processing.

Hardware Validation

Hardware validation is fundamental to electronic control system validation and includes such issues as sensor selection, signal conditioning, and input/output (I/O) device interfacing. All sensors and signal conditioners should be carefully selected to provide the computer interface with accurate data over the possible range of process conditions.

For example, not all transmitters provide the same level of precision, even with the same sensor attached. Relatively cheap 8-bit analog-to-digital (AD) converters are not as precise as more expensive 12- or 16-bit AD converters. The process of choosing an appropriate AD converter should be driven not by price but rather by the precision required by the process. Failure modes should be set so that in the event of a sensor or transmitter failure the process goes to a safe condition.
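The link between converter bit depth and precision can be made concrete with a little arithmetic. The following is a minimal sketch, assuming an ideal converter with no noise or nonlinearity; the 150 deg C transmitter span and the function name `adc_resolution` are hypothetical and chosen only for illustration.

```python
def adc_resolution(span, bits):
    """Smallest change an ideal converter with `bits` bits can resolve over `span`."""
    if bits < 1:
        raise ValueError("bits must be at least 1")
    # An n-bit converter divides the span into 2**n counts.
    return span / (2 ** bits)


if __name__ == "__main__":
    span_c = 150.0  # hypothetical transmitter span in deg C (assumption)
    for bits in (8, 12, 16):
        step = adc_resolution(span_c, bits)
        print(f"{bits}-bit converter: {step:.4f} deg C per count")
```

Under these assumptions an 8-bit converter resolves steps of roughly 0.6 deg C, while a 12-bit converter resolves steps of roughly 0.04 deg C. Comparing such figures with the precision the process requires is one way to let the process, rather than the price, drive the selection.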

Sensors used by the control system must be routinely checked for accuracy and precision. It is very important to ensure that accuracy is achieved over the required operating range of the sensor. FDA suggests performing sensor calibration on a schedule using a minimum-maintenance approach. This means that the period between calibrations should not exceed the time for a sensor to lose accuracy to the point that the output is not compliant with process safety margins (FDA, 1998a). Appropriate security measures must be implemented to prevent unauthorized adjustments to sensor configuration and calibration settings.
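As a rough illustration of the minimum-maintenance idea, the sketch below estimates the longest permissible interval between calibrations. It assumes, purely for illustration, that sensor error grows linearly with time; the drift rate, the margin, and the function name are hypothetical, and real sensors should be characterized from their own calibration history.

```python
def max_calibration_interval(safety_margin, drift_per_day, error_after_calibration=0.0):
    """Days until a linearly drifting sensor error reaches the process safety margin.

    All arguments use the same engineering unit (e.g., deg C); the linear
    drift model is an assumption made for this sketch.
    """
    if drift_per_day <= 0:
        raise ValueError("drift_per_day must be positive")
    remaining = safety_margin - error_after_calibration
    if remaining <= 0:
        raise ValueError("sensor is already outside the safety margin")
    return remaining / drift_per_day


if __name__ == "__main__":
    # Hypothetical values: 0.5 deg C margin, 0.01 deg C drift per day,
    # 0.1 deg C residual error immediately after calibration.
    days = max_calibration_interval(0.5, 0.01, error_after_calibration=0.1)
    print(f"Calibrate at least every {days:.0f} days")
```

In practice the scheduled interval would be set shorter than this upper bound to leave room for uncertainty in the drift estimate.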

--- PAGE BREAK ---

Software Validation

Software validation is perhaps the most time-consuming part of the entire control system validation process. Because of the variety of available control system software products, operating systems, and communication protocols, it is also difficult and thus somewhat expensive. We suggest simplifying the software development process to the following series of basic steps, and applying the validation concept to each step.

• Software Design. Software validation begins at the design phase and is divided into the following general steps based on the given requirements for the program: choosing an appropriate algorithm; designing the data structure, program architecture, and interface; and performing a safety analysis.

The key to creating a well-designed program is to incorporate functional independence into the software. The level of functional independence can be measured by two parameters: module cohesiveness and coupling. Cohesiveness means that the module performs a certain logical task with little or no interaction with other program modules. Module cohesiveness is a guiding principle behind object-oriented programming and allows components of software code to be validated independently. One unified software component can be reused without the need for reverification. Coupling is an indicator of module interconnections inside the program. Ideally, to create a well-designed software architecture, cohesiveness should be maximized and coupling minimized.

The effort required for software quality assurance directly corresponds to program complexity. The more complex the program is, the more difficult it is to test and verify its adequacy. Grady (1992) suggests looking at the program complexity as a combination of data complexity, structural complexity, and procedural complexity. Data complexity is measured by the number of input and output variables in the system. Structural complexity is measured by each module's fan-out, that is, the number of modules directly subordinate to a particular module. Procedural complexity is measured by the total number of possible decisions. The goal of software complexity analysis is to minimize the complexity to an acceptable level. For example, structural complexity is usually considered acceptable at a module fan-out level of not more than 5–10.
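The fan-out measure of structural complexity is simple to compute once the module hierarchy is written down. The sketch below is one possible way to do so, assuming the hierarchy is available as a dictionary that maps each module to the modules it directly calls; the module names are hypothetical, and the default limit of 10 is taken from the upper end of the range quoted above.

```python
def fan_out(call_graph):
    """Return {module: number of distinct directly subordinate modules}."""
    return {module: len(set(children)) for module, children in call_graph.items()}


def modules_over_limit(call_graph, limit=10):
    """List modules whose fan-out exceeds the acceptable limit."""
    return sorted(m for m, n in fan_out(call_graph).items() if n > limit)


if __name__ == "__main__":
    # Hypothetical module hierarchy for a retort controller (illustration only).
    graph = {
        "retort_control": ["read_temperature", "read_pressure", "pid_loop", "alarm_handler"],
        "pid_loop": ["set_steam_valve"],
        "alarm_handler": ["log_event", "notify_operator"],
    }
    print(fan_out(graph))
    print(modules_over_limit(graph, limit=3))
```

Modules flagged by such a check are candidates for being split into smaller, more cohesive units before testing begins.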

Most modern control systems use a human–machine interface (HMI) to visualize the interaction between the user and the control system. Interface design is one of the most critical parts of the software design phase. Leveson (1995) identified several important issues associated with the control system interface design: system response time, user help completeness and clarity, error/alarm information handling, and ease of operation.

The final validation step in the software design phase is safety analysis. The entire range of possible inputs within each subroutine should be tested and its output validated. This is especially important for the software-controlled steps that are considered critical control points of the process. All “safety nets” implemented in code to handle unexpected errors should be fully tested and verified to assure that they behave as designed.

• Software Implementation. There are several important issues in the implementation phase related to validation. The program code needs to be as simple as possible without reducing its efficiency. Most software engineers have been trained to write software using as few instructions as possible to accomplish program objectives. However, this approach usually adds complexity to the program. Since most of the modern microprocessor-based systems can operate at very high speeds and can handle large programs, it is more prudent for control system validation purposes to develop software code that is simple and easy to debug rather than develop code that is complex in nature. The program should be well structured and thoroughly documented.

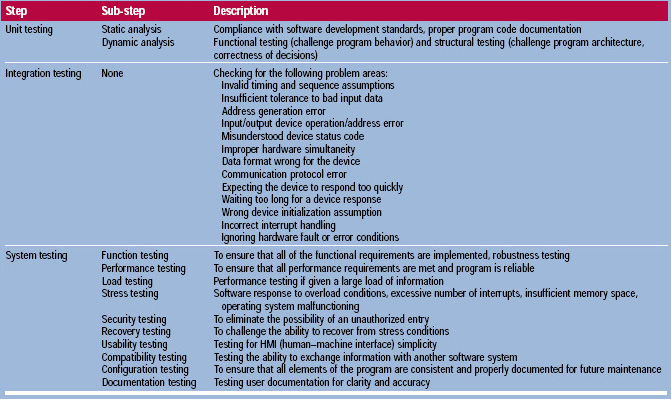

• Software Testing. This is perhaps the most important step in software validation, since it is the final stage before the program will face real-world tasks. FDA (1998c) suggests dividing software testing into a series of steps: unit testing, integration testing, and system testing. Unit testing means that each individual module in the program is tested independently to ensure its adequacy. Integration testing is combining modules together into larger and larger objects and testing them until the entire program structure has been covered. System testing looks at the entire program as a logical entity, and examines its compatibility with the intended environment. Further information about each of these steps is included in Table 1.

Suzuki (1996) suggests matching the level of validation to the criticality of the function performed by the software. The most critical areas of the process under software control should be more intensively tested and documented than less-critical steps.

Three general types of tests can be done to ensure software integrity, safety, quality, and reliability:

Functional (“Black-Box”) Tests. These tests evaluate software interaction with the environment. Here, the program is viewed as a black box with inputs and outputs. Its response to a specific input to the program is analyzed for correct behavior without analysis of the program’s internal code and logic flow. Myers (1979) strongly suggests testing for unexpected inputs as well as for inputs expected under normal circumstances. Giving an unexpected input to the program can demonstrate its reliability. The advantage of “black-box” testing is that it characterizes the behavior of the software under the environmental conditions for which it is intended. The main disadvantage is that although the program provides a correct output in response to its input, such output may be generated through a wrong series of logical operations. Such logic errors, if they exist, will remain undetected.
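A functional test can be sketched as a table of inputs and expected outputs that is run against the routine without looking inside it. The example below is a minimal illustration only: the routine `steam_valve_command`, the 0 to 150 deg C sensor range, and the 121 deg C setpoint are all hypothetical. Note that the case table includes unexpected inputs as well as normal ones.

```python
import math

SENSOR_MIN_C, SENSOR_MAX_C = 0.0, 150.0  # hypothetical transmitter range


def steam_valve_command(temp_c, setpoint_c):
    """Hypothetical routine under test: open the valve below setpoint,
    close it otherwise, and request a safe shutdown on any invalid reading."""
    if isinstance(temp_c, bool) or not isinstance(temp_c, (int, float)):
        return "SAFE_SHUTDOWN"
    if math.isnan(temp_c) or not (SENSOR_MIN_C <= temp_c <= SENSOR_MAX_C):
        return "SAFE_SHUTDOWN"
    return "OPEN" if temp_c < setpoint_c else "CLOSED"


# (input temperature, setpoint, expected output)
BLACK_BOX_CASES = [
    (100.0, 121.0, "OPEN"),                   # normal: below setpoint
    (121.0, 121.0, "CLOSED"),                 # boundary: exactly at setpoint
    (130.0, 121.0, "CLOSED"),                 # normal: above setpoint
    (-40.0, 121.0, "SAFE_SHUTDOWN"),          # unexpected: below sensor range
    (float("nan"), 121.0, "SAFE_SHUTDOWN"),   # unexpected: not a number
    (None, 121.0, "SAFE_SHUTDOWN"),           # unexpected: missing reading
    ("hot", 121.0, "SAFE_SHUTDOWN"),          # unexpected: wrong data type
]


def run_black_box(cases):
    """Return the failing cases; only inputs and outputs are examined."""
    failures = []
    for temp, setpoint, expected in cases:
        actual = steam_valve_command(temp, setpoint)
        if actual != expected:
            failures.append((temp, setpoint, expected, actual))
    return failures


if __name__ == "__main__":
    print("failures:", run_black_box(BLACK_BOX_CASES))
```

Because the harness sees only inputs and outputs, it would pass a routine that reaches the right answer through faulty logic, which is the limitation noted above.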

Structural (“White-Box”) Tests. These tests analyze the program code design in detail to challenge the logic of the software. In structural testing, each possible decision path in the program must be executed at least once to uncover all unexpected outcomes. The two essential parts of structural testing are data flow testing (Beizer, 1990) and loop testing (Myers, 1979). Both methods trace the path of each individual variable in the program as it goes through a series of logical loops and continues its way toward the target destination inside the program code design. This technique gives the software designer the advantage of tracing the logical flows inside the software code, thus ensuring that there are no dead ends or never-ending loops that may result in wrong logical decisions. Since structural tests do not consider the relationship between the software and the environment, this method should be used in conjunction with functional tests.
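The requirement that every decision path be executed at least once can be checked mechanically. The sketch below is one simple way to do this for a routine with two decisions, and therefore four paths; the routine `release_batch` and its 121 deg C and 3 min thresholds are hypothetical. The routine reports which branch it took at each decision, and the helper lists any paths a given test set fails to reach.

```python
from itertools import product


def release_batch(temp_c, hold_min):
    """Hypothetical two-decision routine.

    Returns (release, path), where `path` names the branch taken at each decision.
    """
    path = []
    if temp_c >= 121.0:      # decision 1: process temperature reached?
        path.append("temp_ok")
    else:
        path.append("temp_low")
    if hold_min >= 3.0:      # decision 2: hold time long enough?
        path.append("hold_ok")
    else:
        path.append("hold_short")
    release = path == ["temp_ok", "hold_ok"]
    return release, tuple(path)


# Every combination of branches through the two decisions.
ALL_PATHS = set(product(("temp_ok", "temp_low"), ("hold_ok", "hold_short")))


def uncovered_paths(test_inputs):
    """Return the decision paths that the given test inputs never exercise."""
    covered = {release_batch(t, h)[1] for t, h in test_inputs}
    return ALL_PATHS - covered


if __name__ == "__main__":
    tests = [(125.0, 5.0), (125.0, 1.0), (100.0, 5.0), (100.0, 1.0)]
    print("uncovered:", uncovered_paths(tests))
```

For real programs the number of paths grows quickly with the number of decisions, which is one more reason to keep procedural complexity low.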

Random Tests. These tests involve challenging the system by random inputs and analyzing its response. This method is generally considered ineffective unless associated with statistical analysis. The statistical technique was first presented by Hecht (1995) and uses the random data taken from statistically defined distributions. The main disadvantage of this method is that it requires a stable software product, so functional and structural testing must be completed prior to conducting the statistical testing. The advantage of this technique is that it reduces the possibility of missing rare operating conditions overlooked by program designers.
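A statistically driven random test can be sketched as follows. This is an illustration only and not the procedure described by Hecht (1995): the controller routine, the normal distribution of the input, and the property being checked (the output must always stay between 0 and 100%) are all assumptions. A fixed seed is used so that any failure can be reproduced.

```python
import random


def heater_output_percent(error_c, gain=8.0):
    """Hypothetical proportional controller; output is clamped to 0-100 %."""
    return max(0.0, min(100.0, gain * error_c))


def random_test(n_trials=10_000, mean=2.0, std=10.0, seed=42):
    """Feed normally distributed errors to the controller and count
    how many outputs violate the 0-100 % requirement."""
    rng = random.Random(seed)  # seeded so that a failing run can be repeated
    violations = 0
    for _ in range(n_trials):
        error_c = rng.gauss(mean, std)
        output = heater_output_percent(error_c)
        if not 0.0 <= output <= 100.0:
            violations += 1
    return violations


if __name__ == "__main__":
    print("violations:", random_test())
```

The distribution parameters would normally be chosen from recorded process data so that rare but plausible operating conditions are sampled.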

We recommend using a combination of the above techniques to ensure software safety, quality, and reliability. All software tests require proper documentation, so the acquired information can be used later during software maintenance.

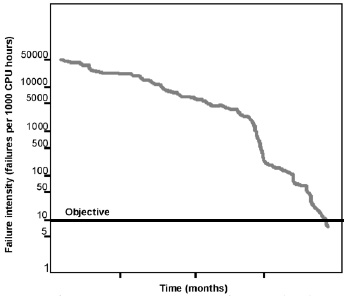

Musa (1989) showed that while it is virtually impossible to eliminate all the possible software errors, it is possible to set a margin for software failure intensity below which the system is considered to perform normally. Musa draws a clear distinction between software failure and software fault: a failure is any situation where a program does not function so as to meet user requirements, whereas a fault is an actual defect in the software code that could lead to program malfunction. The failure intensity level can be developed, so that by comparing the actual failure intensity and the desired level, the creators could evaluate the program performance. After the desired level of failures per unit of time is reached, software is considered to perform normally. An example of accomplishing the desired software reliability is shown in Fig. 1.

Musa (1989) suggests measuring software reliability as number of failures per 1,000 working hours of central processing unit (CPU) time, with an acceptable level of 10. An equally valid criterion for establishing software quality would be defects per 1,000 lines of program code, with an acceptable level of 0.005.
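The failure-intensity criterion reduces to a short calculation. The sketch below assumes that failures and CPU hours have been logged during testing; the function names and the sample counts are hypothetical, and the default objective of 10 failures per 1,000 CPU hours is the level quoted above.

```python
def failure_intensity(failures, cpu_hours):
    """Observed failures per 1,000 hours of CPU time."""
    if cpu_hours <= 0:
        raise ValueError("cpu_hours must be positive")
    return 1000.0 * failures / cpu_hours


def meets_objective(failures, cpu_hours, objective=10.0):
    """True when the observed failure intensity is at or below the objective."""
    return failure_intensity(failures, cpu_hours) <= objective


if __name__ == "__main__":
    # Hypothetical test logs: (failures observed, CPU hours of testing).
    for failures, hours in [(12, 500.0), (3, 500.0)]:
        rate = failure_intensity(failures, hours)
        print(f"{rate:.1f} failures per 1,000 CPU h, acceptable: {meets_objective(failures, hours)}")
```

Tracking this figure from one test cycle to the next shows whether the software is converging on the desired reliability level.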

• Operation and Maintenance. Validation is also important during the operation and maintenance phase. Software performance should always be monitored and any errors and unexpected incidents properly documented and analyzed. Any software modification made during this phase needs to receive the same scrutiny as that used during the initial software design, since the program code integrity has changed. To avoid any complications, all program modifications should be performed in the same manner as software design, and be properly validated to provide sufficient quality assurance.

• Documentation. All software test results should be documented as described in ANSI/IEEE Standard 829-1983, “Software Test Documentation.” Some standards also apply to software quality assurance (ANSI/IEEE Standard 730-1984), software configuration (ANSI/IEEE Standard 828-1983), and software requirements specifications (ANSI/IEEE Standard 830-1984). These standards should be followed to provide a safe, high-quality, and efficient software product.

System Validation

In addition to hardware and software validation, it is absolutely necessary to validate the control system as an integrated entity. System validation can be performed using the same techniques as for software validation, namely, functional and structural testing.

To better understand the system organization for structural testing, FDA (1998b) suggests creating a piping and instrumentation drawing (P&ID) of the system prior to its validation. All of the individual system components should be indicated in the scheme. Not only is it useful to create a scheme of the core parts of the system, such as central control unit, input and output block, and possible network connections, but it is also important to indicate specific types and models of all components and I/O devices. Having the scheme ready, it becomes much easier to track all possible interaction between the software and hardware components.

Before the actual system installation, several important factors need to be taken into consideration. FDA (1998b) suggests that a survey of the processing area be conducted to assure that none of the control system components are located in environments for which they are not designed. Placing control system components in areas of high temperature, humidity, static, and electromagnetic interference should generally be avoided.

Signal corruption is another concern with electronic control systems. Signal degradation is possible if low-voltage signals are transmitted through areas of high electromagnetic interference and over long distances. Sources of electromagnetic interference include motors, portable cell phones and radios, and high-voltage power lines. This problem can be avoided by using shielded cables or fiberoptic lines for signal transmission.

If analog electronic technology is used for communications between various control system components, the signal should be transmitted in the form of current, not voltage. Newer digital transmission protocols, such as Foundation Fieldbus (Fieldbus Foundation, Austin, Tex.), are often more robust than analog communications, and provide access to sensor diagnostics information.
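One practical benefit of current signaling is that a broken wire or failed transmitter can be told apart from a legitimate low reading. The sketch below assumes the widely used 4-20 mA current loop, which is not named in the guidance discussed here; the fault thresholds of 3.6 and 21.0 mA and the function name are likewise assumptions for illustration.

```python
def loop_current_to_value(current_ma, low, high,
                          fault_below_ma=3.6, fault_above_ma=21.0):
    """Convert a 4-20 mA loop current to engineering units.

    Returns None when the current is outside the plausible band, so the
    caller can drive the process to its safe condition. Thresholds are
    illustrative assumptions.
    """
    if current_ma < fault_below_ma or current_ma > fault_above_ma:
        return None  # e.g., open circuit (about 0 mA) or shorted transmitter
    # 4 mA maps to `low`, 20 mA maps to `high`.
    return low + (current_ma - 4.0) * (high - low) / 16.0


if __name__ == "__main__":
    for ma in (4.0, 12.0, 20.0, 0.0):
        print(ma, "mA ->", loop_current_to_value(ma, 0.0, 150.0))
```

With a voltage signal that starts at zero, by contrast, a dead sensor and a true zero reading look the same to the controller.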

Uninterruptible power supply (UPS) systems should be used to provide emergency power to electronic control systems in critical processing areas and those components that require soft shutdown. In this way, the sudden failure of power-sensitive components can be prevented.

Backup systems are an essential part of every computer-based control system and can be implemented through software, hardware, or both. While it is not necessary to keep paper copies of all records, sufficient measures must be put in place to assure the integrity of all process data collected, stored, and maintained electronically. As indicated above, computer systems used to maintain electronic records for food manufacturing operations must meet all the requirements of 21 CFR 11 pertaining to food processing (i.e., parts 106, 108, 110, 113, 114, and 123).

For electronic record-keeping systems, the establishment of a sufficient level of system security is also a very important part of control system validation. Because it is extremely critical, FDA requires that the software be protected from unauthorized usage, so that none of the records can be altered.

Only after a control system has been validated and considered to be working properly in a safe and efficient manner should personnel who would be operating it be trained. Each individual who will be responsible for working with the system should receive proper training. It is also important to divide the responsibility for operation and maintenance of the system among various departments.

FDA (1998b) emphasizes that it is necessary to train employees not only how to work with the system but also how to recognize and respond to any software or hardware error. Personnel should be acquainted with all system alarms that may occur during operation.

Validation Management

There are three general approaches to managing control system validation: prospective, retrospective, and real-time validation. The terms “prospective” and “retrospective” validation were originally introduced by FDA in the Guidelines on General Principles of Process Validation (FDA, 1987b).

• Prospective Validation. This is called for either when an entirely new control system is going to be implemented or when there is a change in the existing control system that may affect the process behavior (revalidation).

After the equipment has been selected, it should be tested to verify that it is capable of operating satisfactorily within the operating limits required by the desired process. This phase may include examination of individual control system components, and determination of any calibrations, adjustment, and maintenance requirements. It may also include identification of the critical equipment features that could affect the desired process and quality or safety of the final product. The procedures for all required calibrations and adjustments for post-installation maintenance should be well documented and fully described. All repair documentation that will be required should also be prepared. At this phase, a thorough software, hardware, and sensor validation should be performed according to the guidelines described above.

Evaluation of the equipment during the prospective validation should not solely rely on the equipment supplier’s representation, but be based on theoretical and practical engineering principles. All tests done with the equipment should show that it is reliable under worst-case situations. If any of the tests fail, a thorough examination should be performed to identify the cause of the failure, which may be a process deviation, a hardware malfunction, or a software error.

Functional and structural testing should be done at this phase to adequately demonstrate the effectiveness of the system.

Prospective control system validation can be very cost effective because it dramatically reduces the dependence on intensive post-installation and in-process testing. Quality, safety, and effectiveness can be actually designed and built into the control system.

• Retrospective Validation. This applies when the control of the production line has already been designed and established, with the product already in distribution, but a sufficient control system validation procedure has never been performed. It is performed in the same manner as prospective validation, although it requires intense post-installation and in-process hardware, software, and system testing.

• Real-Time Validation. Computers have recently become more powerful, and many companies are beginning to embed supervisory control systems with intelligent capabilities that can analyze and validate processes in real time. Although not explicitly mentioned in the Pharmaceutical Manufacturers Association’s life-cycle approach (see below), real-time validation embodies the same spirit as given by that approach and serves as a powerful mechanism to aid in its implementation.

There are two main approaches to performing real-time validation: hardware redundancy and analytical redundancy. Hardware redundancy requires several input devices to monitor each parameter of the controlled manufacturing process. The generated signals are then compared using different techniques to provide the system with an accurate and precise input. Analytical redundancy involves comparing the input signal value with an estimate calculated from a previously determined correlation of a given parameter with other parameters monitored by other sensor devices.
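Both forms of redundancy can be sketched in a few lines. The example below is illustrative only: median voting is just one of several possible comparison techniques, the linear correlation used for the analytical check is a hypothetical stand-in for whatever relationship has been established for the actual process, and all function names and numbers are assumptions.

```python
from statistics import median


def voted_value(readings, max_spread):
    """Hardware redundancy: use the median of redundant sensors and
    report the indexes of sensors that disagree with it by more than max_spread."""
    value = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - value) > max_spread]
    return value, suspects


def analytical_check(measured, related_value, slope, intercept, tolerance):
    """Analytical redundancy: compare a measurement with an estimate computed
    from a previously fitted linear correlation with another measured parameter."""
    estimate = slope * related_value + intercept
    return abs(measured - estimate) <= tolerance, estimate


if __name__ == "__main__":
    # Three hypothetical temperature sensors on the same vessel; one has drifted.
    print(voted_value([121.2, 121.4, 135.0], max_spread=1.0))
    # Hypothetical correlation: temperature = 10.0 * pressure + 100.0
    print(analytical_check(121.0, related_value=2.0, slope=10.0,
                           intercept=100.0, tolerance=2.0))
```

Hardware redundancy adds instrument cost but needs no process model, whereas analytical redundancy reuses existing sensors but is only as good as the correlation behind it.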

PMA Life-Cycle Approach

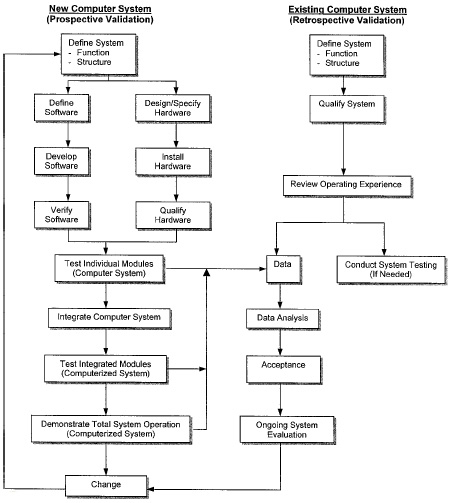

In 1986, PMA’s Computer Systems Validation Committee developed a technique called life-cycle approach for the validation of computer-based automation systems. This technique covers both prospective validation and retrospective validation. Fig. 2 illustrates the steps required to perform a prospective validation for a new system and how it differs from a retrospective validation for an existing system.

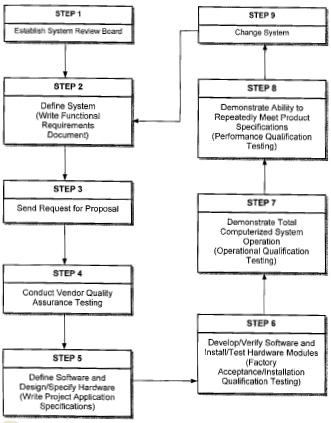

McKinstry et al. (1994) modified the life-cycle approach for computer system validation so it could be more widely used in manufacturing and be easily customized. Fig. 3 shows the modified life-cycle approach, which consists of nine basic steps corresponding to the phases defined by PMA (Fig. 2). The major contribution by McKinstry et al. is the assignment of responsibility for creation, implementation, maintenance, and management of validation procedures.

Validation Protocol

The validation protocol has been described as a written plan stating how validation procedures will be conducted (FDA, 1987b). The life-cycle approach can provide a basis for developing the validation protocol. Validation protocols should be developed individually for prospective and retrospective validation and should include test procedures and parameters, desired process characteristics, and description of production and computer control system equipment. Decision points that will indicate whether test results are acceptable or not should also be included. The developed protocol should specify a sufficient number of replicate process runs to demonstrate reproducibility and provide an accurate measure of variability among runs.
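A decision point on replicate runs might be expressed as shown below. This is a minimal sketch under stated assumptions: the acceptance rule (mean within a bias limit of the target and run-to-run standard deviation within a limit), the limits themselves, and the sample data are hypothetical and would in practice be defined in the protocol.

```python
from statistics import mean, stdev


def evaluate_replicates(values, target, max_bias, max_stdev):
    """Summarize replicate process runs and apply a simple acceptance rule."""
    if len(values) < 2:
        raise ValueError("at least two replicate runs are needed to estimate variability")
    avg = mean(values)
    sd = stdev(values)  # sample standard deviation across runs
    acceptable = abs(avg - target) <= max_bias and sd <= max_stdev
    return {"mean": avg, "stdev": sd, "acceptable": acceptable}


if __name__ == "__main__":
    # Hypothetical hold temperatures (deg C) from three replicate runs.
    runs = [121.0, 121.2, 120.8]
    print(evaluate_replicates(runs, target=121.0, max_bias=0.5, max_stdev=0.5))
```

Writing the rule down in this explicit form before testing begins helps ensure that acceptance is decided by the protocol rather than after the results are seen.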

Perhaps the best way to create a validation protocol is to make an easy-to-follow checklist that includes separate sections for hardware and software configuration, process performance, communications, and challenge tests to reveal possible weaknesses of the control system.

The validation protocol should not only target the initial control system design but also include provisions for operating wear and tear. Therefore, validation procedures should include schedules for periodic maintenance and parts replacement over time. A maintenance log for the equipment needs to be kept during the manufacturing process, because it can be very useful later for various failure investigations. In addition, a revalidation protocol should be designed as a part of the prospective validation protocol.

FDA (1998b) also recommends creating a procedure for personnel response in the event of a process alarm. This should be documented as a part of the protocol for computer control system validation.

Using a Third-Party System

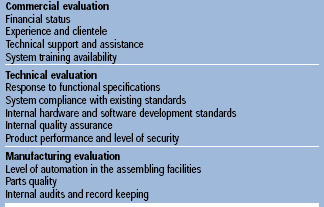

When selecting third-party software or hardware for computerized control systems, vendor selection is the first step. Abel (1993) suggests auditing possible vendors by going through a checklist of critical questions to analyze the factors presented in Table 2. The vendor should provide the client with validation documents covering all the steps that were made to ensure that the proposed control system will meet all the current government regulations and customer requirements and will function properly through its entire life cycle.

The end user can use the vendor’s compliance with various hardware and software development standards as a criterion to judge third-party software and hardware products. For example, software products can be specified to be compliant with IEC standard 1131-3 (programming languages) or to use the Object Linking and Embedding for Process Control (OPC) standard for ActiveX controls, etc.

As suggested by Eastwick (1995), validation and verification of off-the-shelf software consists primarily of three core parts: (1) it is assumed that the third-party software package is already validated and verified by the manufacturer, and complies with the existing standards for software validation; (2) the installed package should be completely customized for the process and properly documented; and (3) the software should be tested to determine its fault tolerance (criticality). All of the above steps should be performed with close interaction by both the vendor and the customer, since only the latter might know all the critical factors of the automated process (Eastwick, 1995).

Recommendations

Given the current level of automation in the food industry, we can make the following recommendations:

A specific control system validation plan should be established by all food manufacturers. This plan should be based on the modified PMA life-cycle approach. The personnel responsible for implementing this plan form a validation team that will develop protocols and implement inspections as necessary.

Protocols should be developed using existing standards (e.g., ISO/IEC 12207 Standard for Information Technology). The advantage of using this standard is its international recognition and applicability to various areas of manufacturing.

Purdue University’s Computer Integrated Food Manufacturing Center is currently reviewing standards and procedures set forth by other manufacturing sectors for applicability to food manufacturing. These reviews, in combination with validation tests conducted on computer-controlled unit operations, will provide additional data to make more specific recommendations in the future.

by SASHA V. ILYUKHIN, TIMOTHY A. HALEY, AND JOHN W. LARKIN

Author Ilyukhin is Graduate Research Assistant, and author Haley is Assistant Professor and Director, Computer Integrated Food Manufacturing Center, Purdue University, 1160 Food Science Bldg., West Lafayette, IN 47906. Author Larkin is Branch Chief, National Center for Food Safety and Technology, Food and Drug Administration, 6502 Archer Rd., Summit-Argo, IL 60501. Send reprint requests to author Haley.

Edited by Neil H. Mermelstein, Senior Editor

References

Abel, J.T. 1993. Computer system validation: Questions for the audit. Pharm. Eng. 13: 50-59.

ANSI/IEEE. 1983. Software configuration, Standard 828. http://webstore.ansi.org/ansidocstore/stdreq.asp.

ANSI/IEEE. 1983. Software test documentation, Standard 829. http://webstore.ansi.org/ansidocstore/stdreq.asp.

ANSI/IEEE. 1984. Software quality assurance, Standard 730. http://webstore.ansi.org/ansidocstore/stdreq.asp.

ANSI/IEEE. 1984. Software requirements specifications, Standard 830. http://webstore.ansi.org/ansidocstore/stdreq.asp.

Beizer, B. 1990. “Software Testing Techniques,” 2nd ed. Van Nostrand Reinhold, New York.

Deitz, D.L. and Herald, C.J. 1993. Computer systems validation and software development process. ISA: Transactions 32: 65-73.

Eastwick, M. 1995. Verification and validation of off-the-shelf software. Med. Device Diagnostic Ind. 17: 90-96.

FDA. 1987a. Software development activities. Office of Regulatory Affairs, Food and Drug Admin., Rockville, Md.

FDA. 1987b. Guideline on general principles of process validation. Office of Compliance, Center for Devices and Radiological Health and Center for Drugs and Biologics, Food and Drug Admin., Rockville, Md.

FDA. 1998a. Code of Federal Regulations, Title 21, Part 11. Electronic records; Electronic signatures. www.access.gpo.gov/nara/cfr/waisidx_98/21cfr11_98.html.

FDA. 1998b. Guide to inspections of computerized systems in the food processing industry. www.fda.gov/ora/inspect_ref/igs/foodcomp.html.

FDA. 1998c. Software testing effort. Div. of Emergency and Investigational Operations, Office of Regulatory Affairs, Food and Drug Admin., Rockville, Md.

Grady, R.B. 1992. “Practical Software Metrics for Project Management and Process Improvement.” PTR Prentice-Hall Inc., Upper Saddle River, N.J.

Hecht, H. 1995. Verification and validation guidelines for high integrity systems. NUREG/CR-6293. Prepared for U.S. Nuclear Regulatory Commission, Washington, D.C.

Homepage for ActiveX controls. www.microsoft.com/com/tech/activex.asp.

IEC 1131. Programming standard. www.iec.org.

IEEE/IEC 12207. 1998. Standard for information technology. www.ieee.org.

Leveson, N.G. 1995. “Safeware, System Safety and Computers.” Addison-Wesley Inc., New York.

Leveson, N. and Turner, C. 1993. An investigation of the Therac-25 accidents. IEEE: Trans. Software Eng. 18(7): 18-41.

McGrath, M., Gaiser, M., and O’Connor, B. 1998. The implications and impact of the year 2000 on the food process industry. J. Food Eng. 38(1): 87-100.

McKinstry, P.L., Atwong, C.T., and Atwong, M.A. 1994. An application of a life cycle approach to computer system validation. Pharm. Eng. 14: 46-50.

Musa, J.D. 1989. Tools for measuring software reliability. IEEE Spectrum 18: 39-42.

Myers, G.J. 1979. “The Art of Software Testing.” John Wiley and Sons, New York.

OPC Foundation Web site. www.opcfoundation.org.

Reuters. 1999. Hershey haunted by computer glitch. Reuters News Service, Oct. 29, 12:50 p.m. p.t. http://news.cnet.com/news/0-1008-200-1425315.html.

Suzuki, J.K. 1996. Documenting the software validation of computer-controlled devices and manufacturing processes: A guide for small manufacturers. Med. Device Diagnostic Ind. 18: 218-227.